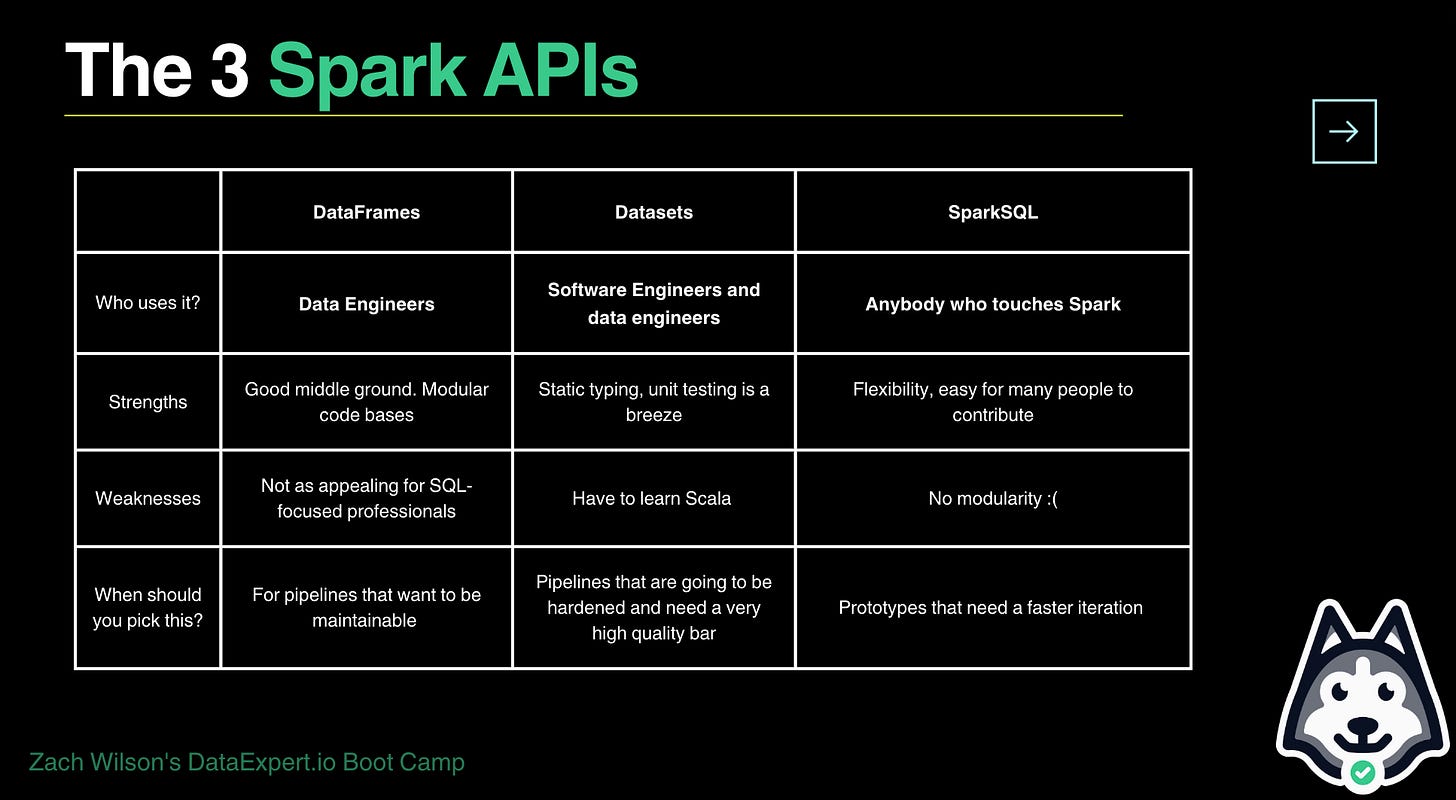

Spark offers so many different APIs and languages that it can be overwhelming to figure out which one is “best.”

In this article, I will discuss the tradeoffs of each, since there’s a lot of dogma and misinformation out there about them!

The SparkSQL API

SQL APIs are data scientists and analys…


Subscribe to DataEngineer.io Newsletter to keep reading this post and get 7 days of free access to the full post archives.