Whenever you’re building a data system, there are a million things to consider. Gone are the days of just shoving everything into MongoDB and calling it “web scale.”

In this newsletter, we’ll go over what you should be thinking about when building large-scale data systems.

Systems have different needs and functionality

Data systems can be living and breathing in real-time like the Uber app with a data latency of milliseconds. Or they can be incredibly slow to update like the US census with a data latency of ten years!

Why doesn’t Uber design their system like the US Census? I think it has something to do with the fact that ordering an Uber 10 years ahead of time isn’t classified as a “normal human behavior.”

Okay, but what about the other way around? Why doesn’t the US Census design their data system like Uber? Wouldn’t having extremely up-to-date information on the population be beneficial?

For the US Census, the benefit of real-time data isn’t strong enough to justify the complexity. Every single mortuary, hospital, and midwife would need an app to say, “yep this person died” or “yep this person was born.” Even if we set aside privacy concerns, getting that adopted across the entire country would be extremely cumbersome without that much benefit.

So both data systems have pretty good reasons for doing things the way they do.

Determining your system’s needs

When architecting a data system, there are three needs that are the most critical in determining which technologies you should select:

Latency

If your data system needs to be extremely low latency (<10 seconds as a cutoff), your system needs to be architected as a “push” system instead of a “pull” system.

Push systems work by sending data to the data store as it is generated. Another name for this is publish and subscribe. Common technologies in push systems are Apache Kafka, Apache Flink, Spark Structured Streaming, Apache Pinot, and ClickHouse.

Pull systems work by periodically querying the source system and bringing a batch of data to a data store. Generally speaking, the interval between data pulls is greater than 10 seconds, which makes pull systems unsuitable for low-latency use cases. Common technologies in pull systems are Apache Iceberg, Apache Spark, Snowflake, BigQuery, and Apache Airflow (or other orchestrators like Prefect, Mage, and Dagster).
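Here’s a minimal sketch of the difference, assuming a toy in-memory setup. The `Broker` and `SourceTable` classes and the `pull_batch` function are made up for illustration; they stand in for something like Kafka and a source database, and don’t mirror any real library’s API.

```python
from collections import defaultdict
from typing import Callable


class Broker:
    """Toy in-memory publish/subscribe broker (hypothetical stand-in for Kafka)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Push: every subscriber sees the event the moment it is produced.
        for handler in self._subscribers[topic]:
            handler(event)


class SourceTable:
    """Toy source system that a pull job queries on a schedule."""

    def __init__(self) -> None:
        self.rows: list[dict] = []

    def rows_since(self, offset: int) -> list[dict]:
        return self.rows[offset:]


def pull_batch(source: SourceTable, store: list[dict], offset: int) -> int:
    """Pull: copy everything written since the last run and return the new offset."""
    batch = source.rows_since(offset)
    store.extend(batch)
    return offset + len(batch)


# Push path: the store is updated as each event is generated.
push_store: list[dict] = []
broker = Broker()
broker.subscribe("rides", push_store.append)
broker.publish("rides", {"ride_id": 1})
broker.publish("rides", {"ride_id": 2})

# Pull path: nothing lands in the store until the scheduled job runs.
source = SourceTable()
pull_store: list[dict] = []
source.rows.append({"ride_id": 1})
source.rows.append({"ride_id": 2})
rows_before_pull = len(pull_store)          # still empty at this point
offset = pull_batch(source, pull_store, 0)  # the "scheduled" run
```

In the push path the data latency is roughly the time it takes to deliver one event. In the pull path it is bounded by how often `pull_batch` gets scheduled.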

Correctness

Data quality is often an important piece of a data system as well. Correctness constraints are connected very closely with ACID compliance.

ACID compliance is a powerful tool to maintain data correctness. It guarantees that there are no “partial” or “incomplete” transactions: a transaction either commits in full or has no effect, and reads never see its half-finished state. Fully ACID-compliant databases are the pinnacle of data correctness. ACID compliance is more common in “pull” architectures than “push” architectures, although it can be implemented in both.

An opposing philosophy to ACID is BASE (basically available, soft state, eventually consistent), which says that the data is always available but correctness is only guaranteed “eventually.” Generally speaking, if correctness is a very high priority, you should choose an ACID system over a BASE one.
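To make the “no partial transactions” guarantee concrete, here’s a small sketch using Python’s built-in `sqlite3` module. The `accounts` table and the `transfer` function are invented for this example; the point is only that when the second statement fails, the first one is rolled back too.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "name TEXT PRIMARY KEY, "
    "balance INTEGER CHECK (balance >= 0))"
)
conn.execute("INSERT INTO accounts VALUES ('alice', 100), ('bob', 0)")
conn.commit()


def transfer(conn, sender, receiver, amount):
    """Move money atomically: both updates commit, or neither does."""
    try:
        # Using the connection as a context manager commits on success
        # and rolls back if an exception escapes the block.
        with conn:
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, receiver),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, sender),
            )
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected an overdraft; the credit to the
        # receiver was rolled back along with it.
        return False


ok = transfer(conn, "alice", "bob", 30)
rejected = transfer(conn, "alice", "bob", 500)  # would overdraw alice
balances = dict(conn.execute("SELECT name, balance FROM accounts"))
```

After the failed transfer, `bob` does not keep the 500 he was briefly credited with. That is the atomicity part of ACID doing its job.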

Scalability

Scalable data systems are systems that allow for partitioning of the data set. Good examples here in the NoSQL family are Cassandra, MongoDB, and DynamoDB. SQL databases that can be sharded, like MySQL, can scale in a similar (though not identical) way to the NoSQL class of databases.

Keep in mind that a lot of data engineering queries don’t care about these constraints, because low-latency queries don’t really matter in the batch data pipeline world.
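As a rough illustration of partitioning, here’s a sketch of hash-based shard routing. `NUM_SHARDS`, `shard_for`, `put`, and `get` are hypothetical names, and real systems use more sophisticated schemes (consistent hashing, range partitioning), so treat this as the simplest possible version of the idea.

```python
import hashlib

NUM_SHARDS = 4  # hypothetical cluster size


def shard_for(key: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a key to a shard with a stable hash, so every client agrees."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Each dict stands in for a separate database node.
shards = [dict() for _ in range(NUM_SHARDS)]


def put(key: str, value: dict) -> None:
    shards[shard_for(key)][key] = value


def get(key: str):
    # Only one shard is touched per lookup, which is what lets reads and
    # writes scale as more shards are added.
    return shards[shard_for(key)].get(key)


for user_id in ("user-1", "user-2", "user-3", "user-42"):
    put(user_id, {"id": user_id})
```

Python’s built-in `hash()` is avoided here on purpose: it is randomized per process for strings, so two processes would disagree about where a key lives.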

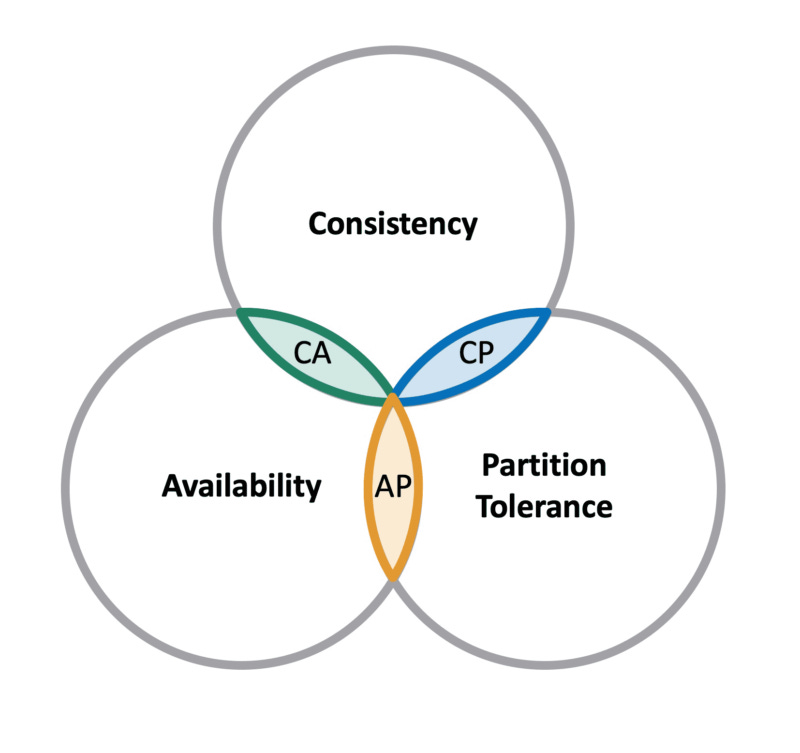

Scalability and correctness often trade off against each other, and the CAP theorem illustrates this tradeoff very nicely. “Eventually consistent” systems like Cassandra will return the correct result given enough time.

According to the CAP theorem, you generally have three types of data stores:

Consistent and Available (i.e. CA) systems (like Postgres)

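These systems don’t tolerate partitioning, which ultimately means they will have scaling issues once the data outgrows a single node. (Partitioning here means a network partition between nodes, not table partitioning.) They’re generally ACID compliant and handle correctness extremely well!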

Availability and Partition tolerant (i.e. AP) systems (like Cassandra)

These systems scale very well, but consistency happens over time. AP systems are used when the exact value of the data doesn’t matter much and returning a value (which might not be the most up-to-date) is more important.

Consistent and Partition tolerant (i.e. CP) systems (like MongoDB)

MongoDB handles this differently from Cassandra. While an update is propagating, Mongo refuses to return the old value, sacrificing availability so the data can be as consistent as possible.
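The AP and CP behaviors described above can be sketched with a toy two-replica value. This is not how Cassandra or MongoDB are actually implemented; `ReplicatedValue`, `read_ap`, `read_cp`, and `Unavailable` are made-up names that only mimic the observable behavior.

```python
class Unavailable(Exception):
    """Raised when a CP-style read refuses to answer."""


class ReplicatedValue:
    """Toy two-replica register, for illustration only."""

    def __init__(self, value):
        self.primary = value
        self.replica = value

    def write(self, value):
        # The write lands on the primary first; the replica lags behind.
        self.primary = value

    def replicate(self):
        # Propagation finishes and the replica catches up.
        self.replica = self.primary

    def read_ap(self):
        # AP: always answer from the replica, even if the value is stale.
        return self.replica

    def read_cp(self):
        # CP: refuse to answer while the update is still propagating.
        if self.primary != self.replica:
            raise Unavailable("update still propagating")
        return self.replica


price = ReplicatedValue(10)
price.write(12)

stale = price.read_ap()  # answers immediately with the old value

try:
    price.read_cp()
    cp_answered = True
except Unavailable:
    cp_answered = False  # gives up availability rather than return 10

price.replicate()
fresh = price.read_cp()  # consistent again, so the read succeeds
```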

How do you pick between push and pull architectures?

Push vs pull is like the Pepsi vs Coke of the data architecture world. In my experience in big tech, I watched some of the most important systems move from pull architectures to push ones. I’ve also watched some of these migrations fail spectacularly!

Push architectures are the software engineer’s best friend. You only process the data as it arrives, with no weird reprocessing of data.

Pull architectures are the data engineer’s best friend. You process data in a defined batch, which you can classify as “normal” or “abnormal” with data quality checks on the entire batch.

This brings me to the Lambda vs Kappa architecture debate.

Data engineers don’t like push as much because the quality guarantees are pretty bad. Software engineers don’t like pull because the latency is bad.

There are three ways to reconcile this:

Lambda architecture

Have a push-based pipeline leveraging Kafka and Flink/Spark Streaming that optimizes for latency

Have another pull-based pipeline that leverages Iceberg and Spark that optimizes for correctness

When you use this architecture, the pain you feel is in complexity, unless you use fancy frameworks like Apache Beam that allow you to switch a pipeline between batch and streaming modes.

When I worked at Netflix, we thought Beam was going to be a silver bullet for our problems in cybersecurity. The issue we found was that the higher-level abstraction made performance tuning much more difficult, so we opted for Spark and Flink, which meant much more code but easier daily maintenance.
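To show where the Lambda complexity comes from, here’s a small sketch of the two pipelines and the serving step that merges them. `SpeedLayer`, `batch_counts`, `serve`, and `CUTOFF_TS` are hypothetical names for this example, and plain Python stands in for Kafka, Flink, Iceberg, and Spark.

```python
from collections import Counter


class SpeedLayer:
    """Push path: update counts one event at a time as events arrive."""

    def __init__(self):
        self.counts = Counter()

    def on_event(self, event):
        self.counts[event["user"]] += 1


def batch_counts(events, cutoff_ts):
    """Pull path: recompute counts from scratch for everything before the cutoff."""
    return Counter(e["user"] for e in events if e["ts"] < cutoff_ts)


def serve(batch, speed):
    """Serving step: trusted batch numbers plus the fresh streaming delta."""
    return batch + speed.counts


events = [
    {"user": "a", "ts": 1},
    {"user": "b", "ts": 2},
    {"user": "a", "ts": 3},
    {"user": "a", "ts": 4},
    {"user": "c", "ts": 5},
]
CUTOFF_TS = 4  # assumption: the last batch run covered everything with ts < 4

batch = batch_counts(events, CUTOFF_TS)

speed = SpeedLayer()
for event in events:
    if event["ts"] >= CUTOFF_TS:
        speed.on_event(event)

merged = serve(batch, speed)
```

Notice that the same counting logic lives in two places, `batch_counts` and `SpeedLayer.on_event`. Keeping those two in sync is the complexity cost.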

Kappa architecture

Have a push-based pipeline leveraging Kafka and Flink/Spark Streaming that optimizes for latency

Have the ability to “replay” events to the streaming job so you get backfill capabilities without having to hold onto everything in expensive storage like Kafka. This replay ability has been added to the new “cold” data stores Iceberg, Delta Lake and Hudi.

Kappa architecture makes up in simplicity what it loses in correctness, although the new event replay abilities make the correctness tradeoff much easier to bear, since backfills can restate bad data!
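Here’s a minimal sketch of the replay idea, assuming the retained events are just a Python list. `run_stream`, `buggy_transform`, `fixed_transform`, and `event_log` are invented for illustration; in practice the log would live in something like Kafka or an Iceberg table.

```python
def run_stream(events, transform):
    """The single code path: fold events into state, whether live or replayed."""
    state = {}
    for event in events:
        key, amount = transform(event)
        state[key] = state.get(key, 0) + amount
    return state


def buggy_transform(event):
    # Bug: reports cents as if they were dollars.
    return event["user"], event["cents"]


def fixed_transform(event):
    return event["user"], event["cents"] / 100


# Hypothetical retained event log.
event_log = [
    {"user": "a", "cents": 250},
    {"user": "b", "cents": 100},
    {"user": "a", "cents": 50},
]

bad_totals = run_stream(event_log, buggy_transform)

# Backfill: replay the same log through the same job with the fix applied.
restated_totals = run_stream(event_log, fixed_transform)
```

There is no separate batch job to write or maintain. The backfill is the streaming job, pointed at older events.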

Pull-only architecture

Have a pull-based pipeline that leverages Iceberg and Spark that optimizes for correctness with great data quality checks

In my experience as a data engineer in big tech, this is the most common architecture, because low-latency constraints are actually pretty rare!
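A batch-level quality check might look something like the sketch below. The `check_batch` function, the `user_id` column, and the thresholds are all assumptions for the example; real pipelines usually lean on a data quality framework for this.

```python
from statistics import mean


def check_batch(rows, recent_row_counts, max_null_rate=0.01, max_count_drift=0.5):
    """Return a list of problems; an empty list means the batch looks normal.

    Assumes recent_row_counts is a non-empty history of earlier batch sizes.
    """
    if not rows:
        return ["batch is empty"]

    problems = []

    # Check 1: share of rows missing the key column.
    null_rate = sum(r["user_id"] is None for r in rows) / len(rows)
    if null_rate > max_null_rate:
        problems.append(
            f"user_id null rate {null_rate:.0%} exceeds {max_null_rate:.0%}"
        )

    # Check 2: row count compared with what recent batches looked like.
    expected = mean(recent_row_counts)
    drift = abs(len(rows) - expected) / expected
    if drift > max_count_drift:
        problems.append(
            f"row count {len(rows)} is {drift:.0%} away from the recent average"
        )

    return problems


history = [95, 105, 100]

normal_batch = [{"user_id": i} for i in range(100)]
abnormal_batch = [{"user_id": None}] * 5 + [{"user_id": i} for i in range(5)]

normal_problems = check_batch(normal_batch, history)
abnormal_problems = check_batch(abnormal_batch, history)
```

Because the whole batch is available at once, checks like “is today’s row count in line with the last few days” are easy to write. That kind of check is much harder to express on a stream of individual events.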

What type of data stores should you have when architecting your systems?

Large-scale data systems often have the following components:

A large scale, sharded source system that has A LOT of data in A LOT of places

At Facebook, this was millions of MySQL databases across the entire globe.

A data lake (usually Iceberg, Delta Lake, Hudi or Hive)

A low-latency analytical store (usually Druid)

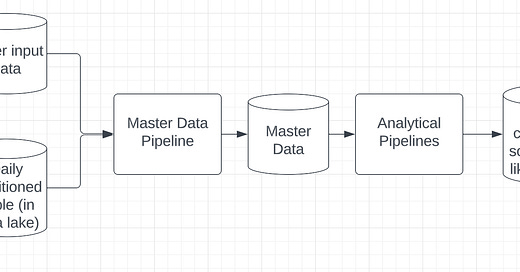

The steps to getting extremely high quality analytical data are as follows:

To get all the data from the sharded locations you need a “daily snapshot” task that queries all the shards to put the entire data set back together again in the data lake.

Take the daily snapshot data along with any other input data in the lake that you need to create high quality master data. Make sure to implement the write-audit-publish pattern when writing out your master data because a lot of people will rely on it and publishing bad data makes your company sad!

Once you have your master data, you create an analytical pipeline that does aggregations on top of it and uploads that data to a low-latency store like Druid.

You have analysts read the Druid data into dashboards or integrate it back into the app for customers to view!
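The steps above can be sketched end to end in a few functions. Everything here is hypothetical (`snapshot_shards`, `audit`, `write_audit_publish`, `aggregate`, and the `orders_master` table), with a dict standing in for the data lake and another for the low-latency store, so read it as the shape of the pipeline rather than a real implementation.

```python
def snapshot_shards(shards):
    """Step 1: query every shard and stitch the rows back into one data set."""
    return [row for shard in shards for row in shard]


def audit(rows):
    """Reject the batch if order IDs are missing or duplicated."""
    ids = [r["order_id"] for r in rows]
    return all(i is not None for i in ids) and len(ids) == len(set(ids))


def write_audit_publish(lake, table, rows):
    """Step 2: write to staging, audit it, and only then swap it into production."""
    staging = f"{table}__staging"
    lake[staging] = rows             # write
    if not audit(lake[staging]):     # audit
        return False                 # production is untouched; staging stays for debugging
    lake[table] = lake.pop(staging)  # publish
    return True


def aggregate(rows):
    """Step 3: roll the master data up for the low-latency store."""
    totals = {}
    for r in rows:
        totals[r["country"]] = totals.get(r["country"], 0) + r["amount"]
    return totals


shards = [
    [{"order_id": 1, "country": "US", "amount": 10}],
    [{"order_id": 2, "country": "BR", "amount": 5},
     {"order_id": 3, "country": "US", "amount": 7}],
]

lake = {}
published = write_audit_publish(lake, "orders_master", snapshot_shards(shards))

# Step 4: dashboards read from the serving store, never from staging.
serving_store = aggregate(lake["orders_master"]) if published else {}

# A bad batch (duplicate order_id) fails the audit and never reaches production.
bad_rows = [{"order_id": 1, "country": "US", "amount": 10}] * 2
bad_published = write_audit_publish(lake, "orders_master", bad_rows)
```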

Determining the right technology for the task at hand can feel intimidating. Learning how to balance latency, correctness, and scalability together will make you feel like a more confident engineer!

You can learn more about how to balance all of these things in depth in the DataExpert.io academy! You can use code ARCHITECTURE25 at checkout to get 25% off!

What other topics would you like to see me talk about in these newsletters? Anything you’d like to add to this discussion? I love talking about architecture!

Hey Zach, excellent article!

I love how you can distill such complex concepts into a single article! I was wondering, in the midst of all these technical concepts, why not also write an article for more senior DEs / leaders who are in the position of having to evaluate their team's individual performance? For example, what measurable KPIs should they consider? How to create a job scorecard to evaluate technical performances?

Hi Zach, Postgres has partitioned tables. What do you mean by: "Like Postgres - These systems don’t tolerate partitioning"