How to data model correctly: Kimball vs One Big Table

What is the right way to do things?

Kimball data modeling has been the gold standard in the data engineering industry for decades now! This isn’t because of a lack of innovation in the data modeling space either. It’s mostly from the fact that Kimball nailed it pretty well.

Kimball data modeling

Let’s first go over Kimball and how things look using those methodologies:

Data should be as normalized as it can be

Normalized meaning: minimizing duplication of columns and maximizing integrity constraints through things like foreign keys (i.e. FKs) and primary keys (i.e. PKs)

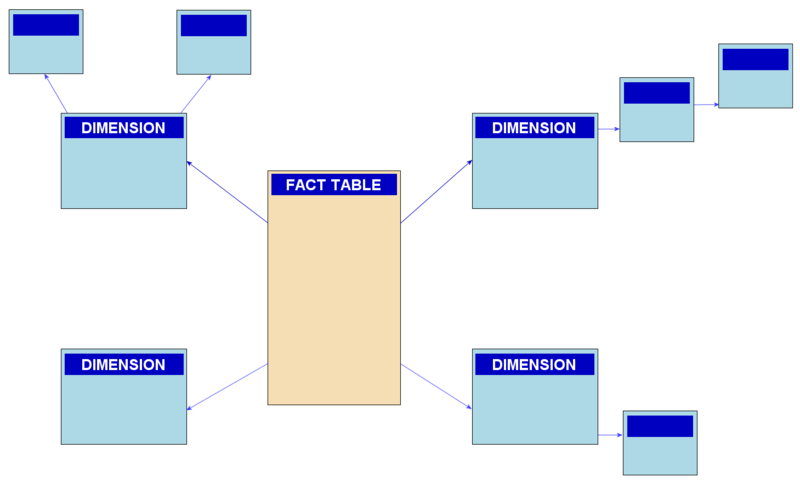

Data should be split into facts and dimensions tables with fact tables being the higher-volume, event-focused data (i.e. the what) and dimensions being the lower-volume, entity-focused data (i.e. the who)

Kimball data modeling is very powerful because you can use fact and dimension data to answer very complicated questions about the relationships of the who and the what inside your data. It also has enforced integrity that offers guaranteed quality!

Where Kimball falls short

In data lake architectures, you lose a lot of the notions of primary keys and foreign keys. You mostly have files in the cloud that are moved around without as much of a “babysitting” database management system.

The data lake architecture also introduced the notion of a thing called shuffle. Shuffle happens in distributed computing environments when you’re trying to JOIN two data sets that are both pretty large (> 10gbs each).

Shuffle is both a blessing and a curse when it comes to distributed computing. It allows for JOINs to happen that weren’t possible before on a single machine but these joins can be painfully inefficient.

Kimball data modeling still shines very brightly in data lake environments when the dimensional data that you bring in with JOIN can be fit in a broadcast JOIN in Spark. This allows the JOIN to happen without shuffle and can be done very efficiently. The constraint is that the dimensional data needs to be small (< 10 GBs per day).

When you lose out on broadcast JOIN as an option, Kimball data modeling starts to look significantly less efficient than it once did. Now every time an analyst wants to answer a question, they have to submit a job that does a great deal of shuffling.

One of the important things in the data lake environment is the sort order of the data table. A sorted fact table vs an unsorted one can mean a ten to one hundred times difference in cloud costs! Kimball data modeling that can’t leverage broadcast join will end up shuffling the data and ruining your sort order!

Enter The One Big Table model

One big table data modeling sounds like a joke in some regards. The name reminds me of the “god controller” in full-stack development. Why would we have a table that has everything in it? Is that really the best abstraction that we can come up with?

The tenets of One Big Table

You should model dimensions cumulatively and make sure they’re deduped

Regenerate your dimensions every day with daily partitioned data and snapshots

Fact data should be added to these dimensions as ARRAY<STRUCT> data types

Use array functions like REDUCE and TRANSFORM in Presto to analyze data without shuffling at all!

To “recreate” the fact data use things like CROSS JOIN UNNEST (in Presto) or LATERAL VIEW EXPLODE (in Spark). The key thing here is the UNNEST/EXPLODE will keep the sort order of your data

You’ll find that analysts will be initially very skeptical and annoyed by these new data structures because there’s a learning curve. After they get over the learning curve, they become delighted by:

How fast their queries run

How much more in-depth their analyses can be

I followed this philosophy when I was working at Airbnb on pricing and availability. We moved all the pricing data into a deduped listing-level table instead of an exploded-out listing-night level table and we saw intense gains in efficiency across the warehouse!

Should you use Kimball or One Big Table?

Here are the things to consider when choosing Kimball or One Big Table:

Data Volume

If your data volume is small, Kimball will still be the better way to go because it has the highest integrity guarantees and you don’t really have to worry about shuffling.

As the data volume grows and especially if both sides of your common JOINs are >10 gigabytes, considering One Big Table data modeling could reduce the amount of shuffling and increase your query efficiencies quite a bit!

Technical skills of downstream users

Are you data analysts willing to learn new things beyond basic SQL? If not, Kimball is going to be a better option.

Remember that you can give them a short retention, exploded view of the data as a middle ground as well.

Data practices at the company

Is your company known for having good data quality standards? One Big Table doesn’t have as many “built-in” quality constraints as Kimball data modeling does. So you’ll need to be thinking about quality from the start when using this new paradigm.

That’s all I got today on data modeling, one big table, and Kimball. This is a very exciting area of data engineering that I hope keeps evolving!

What things do you consider when doing data modeling? How can we improve our practices to be as efficient and fast as possible?

Please share this with your friends who are interested in data modeling! You can get 20% off my boot camp or academy with code ZACH20 at checkout!

I really enjoy your linkedin content! One note - the kimball methodology pushes to denormalize data in the dimension tables. He acknowledges this is a hot topic, but modelers should almost always reduce the urge to normalize. He says the trade off in simplicity is worth the duplicated space. He permits outrigger dimensions, but says they should be the exception, not the rule.

Agree, disagree? Thanks!

- Noah

Where is data vault?